If you live in the modern world (Where else would you live if you’re reading this article? I suppose you might be an internet archaeologist from the future? Or maybe you’re an alien?), the answer to the title of this blog is “yes”, because algorithms control many aspects of our lives. It’s quite shocking once you realise how deeply entrenched they are and, consequently, how deeply they control us. Heck, they can even control our thoughts to some degree.

What is an algorithm?

Before we explore how they manipulate us, we need to understand what an algorithm is. An algorithm is a set of rules or instructions that a computer follows to do a task. This task could be anything from adding two numbers together to working out which YouTube video is about to go viral.

Once they’re set in motion, they operate independently of human interference for the most part. Often, they’re making decisions for us that we aren’t even privy to anymore. For example, job portals will have algorithms that filter people’s CVs. That means that the algorithm will be removing candidates or moving them to the next stage without an actual person ever reading the CV.
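To make the CV example concrete, here is a minimal sketch of what a keyword-based CV screen could look like. Everything in it is an assumption for illustration: the keywords, the `MIN_MATCHES` threshold, the `passes_screen` function and the two made-up candidates. Real job portals will use their own (usually far more elaborate) rules.

```python
# Hypothetical keyword screen: the keywords and threshold are invented for illustration.
REQUIRED_KEYWORDS = {"python", "sql", "teamwork"}
MIN_MATCHES = 2


def passes_screen(cv_text: str) -> bool:
    """Return True if the CV mentions at least MIN_MATCHES of the required keywords."""
    words = set(cv_text.lower().split())
    matches = REQUIRED_KEYWORDS & words
    return len(matches) >= MIN_MATCHES


# Two made-up candidates with similar experience, described in different words.
cvs = {
    "Asha": "Built reporting tools in Python and SQL for a retail team",
    "Ben": "Ten years writing database queries and automating reports",
}

# Nobody reads Ben's CV: he is dropped because he never used the exact keywords.
shortlist = [name for name, text in cvs.items() if passes_screen(text)]
print(shortlist)
```

Notice that the rule never looks at what the candidates can actually do, only at which words they chose.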

Are algorithms bad?

Algorithms are neither bad nor good – they’re neutral. The way people programme them and the reason for their creation can sometimes be bad but, at the same time, can also be good. They can speed up processes and procedures, freeing up our time for other things. For example, a job portal’s CV algorithm frees up the employer’s time. On the downside, the algorithm could be inadvertently filtering out good candidates.

And this two-sidedness is true of other types of algorithms too, e.g., dating apps (they can find you good matches, but can also filter out good matches by accident), internet search engines (they can show you the content you’re searching for, or they can force you to wade through mountains of irrelevant results), banks (they can show you which loans you’re eligible for, but can also wrongly exclude you from loans you could afford)…

Who’s writing the algorithm and why?

One question to ask when deciding if algorithms are bad is, “Who’s writing the algorithm, and why are they writing it?”

It’s human nature to have biases and blind spots in our thinking. Therefore, much of the technology we create has bias and blind spots built in, and it’s particularly obvious with algorithms.

People have this idea that algorithms are free from bias because they’re machines: they don’t make judgements in the same way we do, and they don’t have emotions, so how could they possibly be biased? But this idea fails to see how algorithms have in-built biases.

Accidental/overlooked bias in algorithms

For example, imagine I’m a doctor and I want to find out how many people in my catchment area have been ill with a cold in the last two months. One way I might choose to do this is to create an algorithm that searches patient records to find people who’ve been ill with colds in that period. This sounds easy and straightforward, but it neglects a huge problem: men are less likely than women to seek help for medical problems, so their colds are less likely to appear in the records at all. That means, before it’s even started, the algorithm is biased. Any findings that go on to inform later research are built on inaccurate data. It’s not really capturing a true reflection of who’s been ill with colds because the data’s flawed.
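A quick sketch shows how the gap arises. The numbers below are invented purely for illustration (they are not real health statistics), and the names `groups` and `recorded_cases` are hypothetical. The only assumption carried over from the example is that one group visits the doctor less often than the other.

```python
# All numbers are invented for illustration; they are not real health statistics.
# For each group: how many people actually had a cold, and what share saw a doctor.
groups = {
    "women": {"had_cold": 100, "visit_rate": 0.5},
    "men": {"had_cold": 100, "visit_rate": 0.25},
}


def recorded_cases(group: dict) -> int:
    """Colds that reach the patient records: only people who visited the doctor."""
    return round(group["had_cold"] * group["visit_rate"])


recorded = {name: recorded_cases(group) for name, group in groups.items()}
total_true = sum(group["had_cold"] for group in groups.values())
total_recorded = sum(recorded.values())

print(recorded)        # what an algorithm searching the records would find
print(total_true)      # how many people were actually ill
print(total_recorded)  # how many the records know about
```

In this toy setup both groups were equally ill, yet the records make it look as if women caught twice as many colds. An algorithm that only searches the records has no way of noticing.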

Alternatively, a college-admission algorithm that’s looking for new prospective students may be programmed to look for people who live in a certain area of a city (e.g., one with high-income families). It means that the program will not target people from lower-income brackets and so fewer of them will apply for the college and, eventually, fewer lower-income students will form part of the student body. The group who created the algorithm put their own bias (intentionally or unintentionally) into it and this had a real-world effect on inequality.

Or a loan-application algorithm might reject anyone below a certain income for a £10k+ loan but fail to account for people who fall outside the norm. For example, the app might reject anyone who earns £18k a year or less. But this could miss people who can afford the loan. What if the person has very few living expenses? They may have as much disposable income as a person on £50k a year and can easily afford repayments on a £10k loan, yet they get rejected. This oversight means that both the bank and the customer lose out.
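Here is a rough sketch of that loan example, comparing an income-only rule with one that looks at disposable income. The cut-off figures, the expense amounts and the names `Applicant`, `income_rule` and `disposable_rule` are all assumptions made up for this illustration, not how any real bank works.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    annual_income: int    # in pounds
    annual_expenses: int  # in pounds


# Hypothetical thresholds, chosen only to illustrate the point.
INCOME_CUTOFF = 20_000
REQUIRED_DISPOSABLE = 6_000


def income_rule(applicant: Applicant) -> bool:
    """Approve only if income clears a fixed cut-off."""
    return applicant.annual_income >= INCOME_CUTOFF


def disposable_rule(applicant: Applicant) -> bool:
    """Approve if what is left after expenses clears a threshold."""
    disposable = applicant.annual_income - applicant.annual_expenses
    return disposable >= REQUIRED_DISPOSABLE


applicants = [
    Applicant("low earner, low expenses", 18_000, 8_000),
    Applicant("high earner, high expenses", 50_000, 40_000),
]

for applicant in applicants:
    print(applicant.name, income_rule(applicant), disposable_rule(applicant))
```

Both made-up applicants have £10,000 left over each year, but the income-only rule rejects the first one. Which rule the programmer picks decides who gets the loan.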

Bad actors exploiting people through algorithms

But it’s worse than that. The examples above aren’t necessarily intentional, but there are some bad actors out there. They write algorithms for a variety of reasons. For instance, it’s fairly well-known that during political elections, people write algorithms that target fence-sitters. They write algorithms for social media aimed at finding people who haven’t decided their vote yet. Then they target them with propaganda intended to swing them to one side or the other. These people are doing it on purpose, and it’s been extremely effective in recent years.

We recently wrote an article about gambling firms who targeted problem gamblers with tempting ads. That was made possible through the algorithms they used, which found gambling addicts and offered them promotions they would find difficult to refuse.

Unintended consequences for algorithm creators

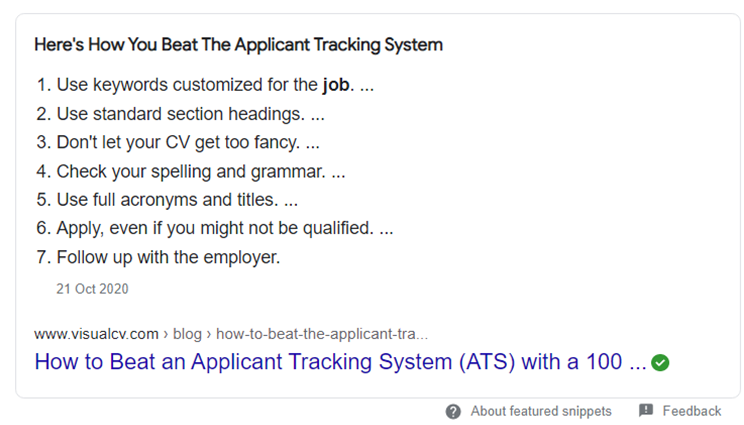

As well as algorithms potentially being bad for the people they target, they can be bad for the companies who use them. For example, job application algorithms are meant to make it easier for employers to find suitable candidates. However, what can often happen is that they weed out the people who know about algorithms and how to beat them from the people who don’t. The best candidates may not be getting through at all, just the people who know how to crack the system. You can even pay people to write your application for you! Just google, “How to beat job application algorithms” and you get this:

The same happens with Google’s SEO algorithms. They’re supposed to show us the best content for the keyword/search term we used, but instead, they can end up showing us the content of whoever’s managed to crack the SEO algorithm.

Summary: Do algorithms control our lives?

Algorithms do control our lives and their hold is becoming more entrenched over time. They’re everywhere, from air traffic control to schools, from telling you which book to read next to manipulating your political views. They do a lot of good and help us to organise an ever more complex world. They can serve us up the best online content, can match us up with the perfect partner, can help us to tackle pandemics, etc.

But they also come with the host of problems outlined above. Perhaps what we need is some sort of oversight? Medicine has NICE; our utility companies have Ofgem, Ofcom, and Ofwat. In fact, there’s oversight for all sorts of industries and technologies, so why not for algorithms?